Efficient practices to reduce data centre heat loads

By Staff Writer | 9 December 2011 | Categories: sponsored content

Existing data centres face three major operational and fiscal constraints: power, cooling and space. As they are required to support increasingly dense configurations, power and cooling requirements can outstrip the capabilities of the data centre infrastructure. In fact, the issue of space becomes moot because existing data centres are projected to run out of power before they run out of space.

HP understands that individual data centre challenges, such as rack and processor-level power and cooling, cannot be viewed and managed as disconnected issues. To this end, HP has developed solutions to the fundamental issues of power and cooling at the processor, server, rack, and facility infrastructure level. The company has also created proven management tools to provide a unified approach to managing power and cooling in data centres.

Efficient practices for facility-level power and cooling

In the past, when data centres mainly housed large mainframe computers, power and cooling design criteria were specified as average wattage per unit area (W/ft² or W/m²) and British Thermal Units per hour (BTU/hr), respectively. These design criteria were based on the assumption that power and cooling requirements were uniform across the entire data centre. Today, IT managers are populating data centres with a heterogeneous mix of high-density hardware as they try to extend the life of their existing space, making it important to understand how power density is distributed across the facility.
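To make the units concrete, here is a minimal Python sketch that converts between the measures mentioned above and compares a facility-wide average with per-zone power density. The zone names, loads and areas are invented for illustration and do not come from HP; only the conversion factors (1 W is roughly 3.412 BTU/hr, 1 ft² is 0.09290304 m²) are standard.

```python
# Standard conversion factors.
W_TO_BTU_PER_HR = 3.412142   # 1 W is roughly 3.412 BTU/hr
SQFT_TO_SQM = 0.09290304     # 1 ft2 = 0.09290304 m2


def watts_to_btu_per_hr(watts):
    """Convert an electrical load in watts to a heat load in BTU/hr."""
    return watts * W_TO_BTU_PER_HR


def w_per_sqft_to_w_per_sqm(w_per_sqft):
    """Convert a power density from W/ft2 to W/m2."""
    return w_per_sqft / SQFT_TO_SQM


def density_report(zones):
    """Return W/m2 for each zone plus the facility-wide average.

    `zones` maps a zone name to a (load in watts, area in m2) pair.
    """
    report = {name: load / area for name, (load, area) in zones.items()}
    total_load = sum(load for load, _ in zones.values())
    total_area = sum(area for _, area in zones.values())
    report["facility average"] = total_load / total_area
    return report


# Hypothetical facility: values are assumptions chosen for illustration.
example_zones = {
    "legacy racks": (20_000, 100.0),   # 20 kW spread over 100 m2
    "blade racks": (60_000, 50.0),     # 60 kW packed into 50 m2
}

if __name__ == "__main__":
    for name, density in density_report(example_zones).items():
        print(f"{name}: {density:.0f} W/m2")
    print(f"Total heat load: {watts_to_btu_per_hr(80_000):.0f} BTU/hr")
```

In this made-up case the facility average sits well below the density of the blade zone, which is the kind of gap a uniform design criterion would hide.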

Airflow distribution for high-density data centres

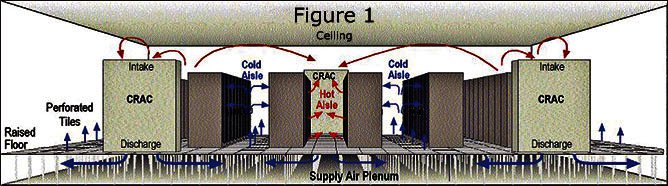

The front-to-rear airflow through HP equipment allows racks to be arranged in rows front-to-front and back-to-back to form alternating hot and cold aisles. The equipment draws in cold supply air from the front and exhausts warm air out the rear of the rack into hot aisles (Figure 1). Most data centres use a downdraft airflow pattern in which air currents are cooled and heated in a continuous convection cycle. The downdraft airflow pattern requires a raised floor configuration that forms an air supply plenum beneath the raised floor. The computer room air conditioning (CRAC) unit draws in warm air from the top, cools the air, and discharges it into the supply plenum beneath the floor.

To achieve an optimum downdraft airflow pattern, warm exhaust air must be returned to the CRAC unit with minimal obstruction or redirection. Ideally, the warm exhaust air will rise to the ceiling and return to the CRAC unit intake. Mixing occurs if exhaust air goes into the cold aisles, if cold air goes into the hot aisles, or if there is insufficient ceiling height to allow for separation of the cold and warm air zones. When warm exhaust air mixes with supply air, two things can happen:

- The temperature of the exhaust air returning to the CRAC unit decreases, thereby lowering the usable capacity of the unit.

- The temperature of the supply air increases, which causes warmer air to be re-circulated through computer equipment.
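Both effects can be illustrated with a rough Python sketch. It assumes a simple flow-weighted mixing model (equal air density and specific heat in both streams) and the textbook sensible-heat relation Q = ṁ × cp × ΔT. The temperatures, mixing fractions and airflow figure are assumptions chosen for illustration, not HP measurements.

```python
CP_AIR_KJ_PER_KG_K = 1.006  # approximate specific heat of dry air


def mixed_temperature(t_main_c, t_other_c, fraction_other):
    """Temperature of a stream made of (1 - fraction_other) air at t_main_c
    and fraction_other air at t_other_c, as a simple weighted average."""
    if not 0.0 <= fraction_other <= 1.0:
        raise ValueError("fraction_other must be between 0 and 1")
    return (1.0 - fraction_other) * t_main_c + fraction_other * t_other_c


def sensible_cooling_kw(mass_flow_kg_s, t_return_c, t_supply_c):
    """Heat removed by the cooling unit at a fixed airflow, in kW."""
    return mass_flow_kg_s * CP_AIR_KJ_PER_KG_K * (t_return_c - t_supply_c)


# Hypothetical operating point (all values are assumptions).
T_SUPPLY_C = 15.0     # cold air leaving the plenum
T_EXHAUST_C = 35.0    # warm air leaving the racks
AIRFLOW_KG_S = 10.0   # airflow through the CRAC unit

# Cold air leaking into the hot aisle lowers the return temperature.
t_return = mixed_temperature(T_EXHAUST_C, T_SUPPLY_C, 0.2)

# Exhaust air recirculating into the cold aisle raises the inlet temperature.
t_inlet = mixed_temperature(T_SUPPLY_C, T_EXHAUST_C, 0.2)

if __name__ == "__main__":
    ideal = sensible_cooling_kw(AIRFLOW_KG_S, T_EXHAUST_C, T_SUPPLY_C)
    actual = sensible_cooling_kw(AIRFLOW_KG_S, t_return, T_SUPPLY_C)
    print(f"Return air: {t_return:.1f} C, rack inlet air: {t_inlet:.1f} C")
    print(f"Heat removed: {actual:.1f} kW with mixing vs {ideal:.1f} kW without")
```

With these assumed numbers, 20% mixing in each direction drops the return air from 35 °C to 31 °C and raises the rack inlet air from 15 °C to 19 °C, so the same airflow carries less heat back to the CRAC unit while the equipment receives warmer air.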

Raised floors

Raised floors typically measure 46 cm to 91 cm from the building floor to the top of the floor tiles, which are supported by a grounded grid structure. Ideally, the warm exhaust air rises to the ceiling and returns along the ceiling back to the top of the CRAC units to repeat the cycle.

Air supply plenum

The air supply plenum must be a totally enclosed space to achieve pressurisation for efficient air distribution. The integrity of the subfloor perimeter is critical to prevent moisture retention and to maintain supply plenum pressure. This means that openings in the plenum perimeter and raised floor must be filled or sealed. The plenum is also used to route piping, conduit, and cables that bring power and network connections to the racks. In some data centres, cables are simply laid on the floor in the plenum where they can become badly tangled.

Summary

Data centres are approaching the point of outpacing the conventional methods used to power and cool high-density computing environments. Escalating energy costs and cooling requirements in existing data centre facilities call for better methodology in the areas of planning and configuration, and more capable analytical and management tools to handle power and cooling demands.

Data centre and facility managers can use best practices to greatly reduce heat loads. These practices include:

- View the data centre and its components as a completely integrated infrastructure.

- Assess existing facility power and cooling resources.

- Maximise power and cooling capabilities at the component level.

- Optimise the facility for efficient power distribution.

- Institute floor plans and standard practices that maximise rack and aisle cooling.

- Promote highly automated and virtualised data centre operation.

- Manage power and cooling as variable resources that dynamically respond to processing.

- Employ continuous and comprehensive monitoring.

- Choose an integrated approach to data centre hardware, software, applications, network and facility.

Figure 1: Airflow pattern for raised floor configuration with hot aisles and cold aisles.

For more information, please contact Gregory Deane at HP on 082-882-4444, or email gregory.deane@hp.com.
