Wikipedia's new AI software, ORES, can immediately detect ‘damaging edits’

By Robin-Leigh Chetty 2 December 2015 | Categories: news

Although sometimes funny, trolls are for the most part the bane of the internet. Their usual home is the comments section of YouTube, but they have also invaded other areas of the web. Aiming to root them out of its own site at least, Wikipedia recently debuted new AI software named Objective Revision Evaluation Service. Referred to as ORES for short, the software is able to analyse new Wikipedia revisions and identify anything that looks troll-like.

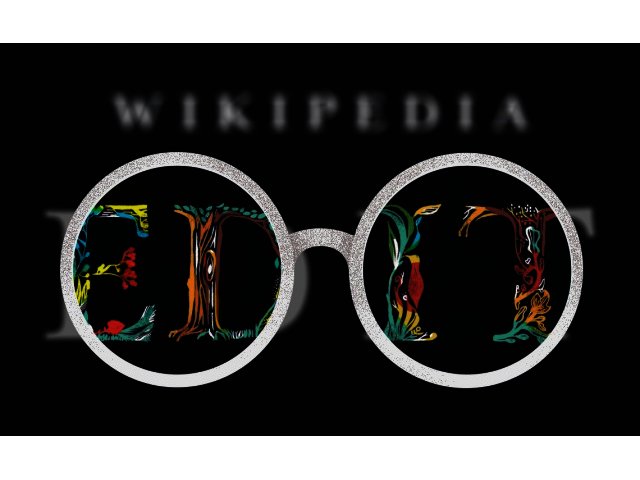

Designed by the Wikimedia Foundation, this software "acts like a pair of x-ray specs since it highlights anything that looks suspicious," according to the foundation. If it does pick something up, it sets the article revision aside for human editors to evaluate and proofread. The Wikimedia team also trained ORES to tell the difference between human error and "damaging edits" by using Wikipedia's own article quality assessments.
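For readers curious what "setting a revision aside" might look like in practice, here is a minimal sketch of the general idea: score each edit, then route anything above a threshold to human reviewers. It is not ORES's actual code or API. The `Revision` class, the `toy_damage_score` function, its word blocklist and the 0.5 threshold are all hypothetical stand-ins for a properly trained model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Revision:
    """Hypothetical record for one edit; not a Wikipedia data structure."""
    rev_id: int
    text: str


def toy_damage_score(rev: Revision) -> float:
    """Stand-in for a trained model: share of words found on a tiny blocklist."""
    blocklist = {"stupid", "lol", "poop"}  # assumption, purely illustrative
    words = rev.text.lower().split()
    if not words:
        return 0.0
    return sum(word in blocklist for word in words) / len(words)


def triage(
    revisions: List[Revision],
    score_fn: Callable[[Revision], float],
    threshold: float = 0.5,  # assumed cut-off, not a documented value
) -> Tuple[List[Revision], List[Revision]]:
    """Split revisions into (flagged for human review, left alone)."""
    flagged: List[Revision] = []
    accepted: List[Revision] = []
    for rev in revisions:
        if score_fn(rev) >= threshold:
            flagged.append(rev)
        else:
            accepted.append(rev)
    return flagged, accepted


revisions = [
    Revision(1, "Added citation for the 2015 census figures"),
    Revision(2, "lol stupid poop"),
]
flagged, accepted = triage(revisions, toy_damage_score)
print("Needs human review:", [rev.rev_id for rev in flagged])
```

The key design point mirrors the article: the software only flags suspicious revisions, and the final call stays with human editors.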

ORES is the first AI tool rolled out to assist the editors and users of Wikipedia, according to the foundation. We hope it can help weed out those pesky trolls (and politicians unethically editing entries) and lend Wikipedia a bit more credibility.
